Part III: Governance and Oversight – Building the Guardrails

Konrad Pazik

6/28/2025 · 14 min read

Your AI Rulebook: A Smart and Safe Way to Manage Risk

Good AI governance isn’t something you do once and forget about; it’s an ongoing commitment to using AI safely and responsibly. Think of it as the essential rulebook that allows your organisation to innovate with confidence. Without clear rules for how you’ll oversee AI, you open yourself up to all sorts of problems, from big fines (the EU AI Act, for example, allows penalties of up to €35 million or 7% of a company’s global annual turnover, whichever is higher, for the most serious breaches) to biased decisions and damage to your reputation.

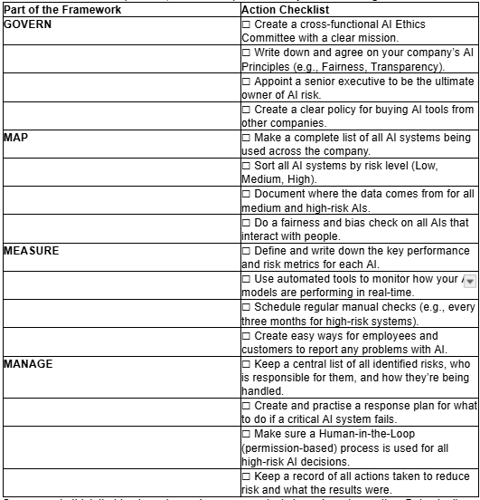

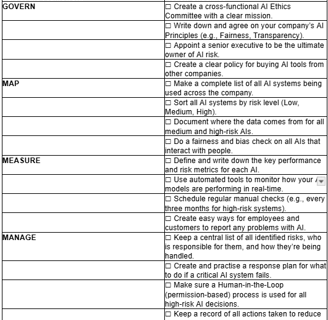

The good news is that you don’t have to start from scratch. A great place to begin is the AI Risk Management Framework (AI RMF) from the U.S. National Institute of Standards and Technology (NIST). It’s a well-respected, voluntary guide. Rather than a rigid checklist, it offers a flexible, continuous cycle with four parts, so you manage AI risk as an ongoing loop and adapt as you go. The four parts are:

GOVERN: This is the foundation. It’s about creating the culture and the team that will be in charge of AI safety.

What to Do: The first step is to create a cross-functional AI Governance Committee. This team should bring together people from different areas like legal, tech, HR, and the main business departments. This group’s job is to write the company’s main AI Principles (your core values) and make sure they line up with the company’s goals and how much risk it’s comfortable with. It’s also vital to have a senior leader who is ultimately responsible for AI risk.

MAP: This part is all about understanding what you’re working with. Before you can manage risks, you have to find them.

What to Do: Create an inventory of every AI system in use across the organisation. This includes "shadow AI": tools that employees use without official approval, which are risky because nobody has checked what data they handle or how they behave. For each AI system, map out what it’s for, what data it uses, and how it might affect people. This lets you sort your AI systems into risk levels (such as low, medium, or high) so you know where to focus your attention.
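For the MAP step, the inventory is easier to keep current if it lives as structured data rather than a spreadsheet nobody updates. The sketch below is a minimal illustration, not something the NIST AI RMF prescribes: the `AISystem` fields and the tiering rules in the hypothetical `assign_risk_tier` function are assumptions chosen for the example, and each organisation would set its own criteria.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass
class AISystem:
    """One entry in the AI inventory (field names are illustrative assumptions)."""
    name: str
    purpose: str
    data_sources: list[str] = field(default_factory=list)
    uses_personal_data: bool = False
    affects_people: bool = False      # e.g. influences hiring, credit or access to services
    officially_approved: bool = True  # False marks "shadow AI" found during discovery


def assign_risk_tier(system: AISystem) -> RiskTier:
    """Hypothetical rule: decisions about people are high risk; personal data
    or unapproved (shadow) tools are medium; everything else is low."""
    if system.affects_people:
        return RiskTier.HIGH
    if system.uses_personal_data or not system.officially_approved:
        return RiskTier.MEDIUM
    return RiskTier.LOW


def build_register(systems: list[AISystem]) -> dict[RiskTier, list[str]]:
    """Group system names by tier so review effort goes to the high tier first."""
    register: dict[RiskTier, list[str]] = {tier: [] for tier in RiskTier}
    for system in systems:
        register[assign_risk_tier(system)].append(system.name)
    return register


# Invented example entries, for illustration only.
inventory = [
    AISystem("CV screener", "Shortlist job applicants", ["applicant CVs"],
             uses_personal_data=True, affects_people=True),
    AISystem("Chat summariser", "Summarise meeting notes", ["meeting transcripts"],
             officially_approved=False),
    AISystem("Stock forecaster", "Predict warehouse demand", ["sales history"]),
]

register = build_register(inventory)
for tier in RiskTier:
    print(tier.value, register[tier])
```

The point of the design is that the tiering rule is written down once and applied the same way to every system, including the shadow tools surfaced during discovery.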

MEASURE: This is where you analyse and track the risks you found in the mapping stage.

What to Do: Set up a regular schedule for testing all your AI models: before they go live, and then at regular intervals afterwards. This testing should check for things like accuracy, security, and fairness. Use a mix of hard numbers (like fairness metrics) and expert judgement to get a full picture of the risks.
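As an illustration of the "hard numbers" side of MEASURE, the sketch below computes two widely used group-fairness metrics, the demographic parity difference and the disparate impact ratio, from a model’s yes/no decisions. The data is invented and the group labels are placeholders. The 0.8 cut-off is the "four-fifths" rule of thumb from US employment-selection guidance, used here as an assumption rather than a universal legal threshold; choosing the right metric and threshold for a given system is exactly where expert judgement comes in.

```python
from collections import defaultdict


def selection_rates(groups: list[str], decisions: list[int]) -> dict[str, float]:
    """Share of positive decisions (1 = approved/shortlisted) for each group."""
    if len(groups) != len(decisions):
        raise ValueError("groups and decisions must be the same length")
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(rates: dict[str, float]) -> float:
    """Gap between the highest and lowest selection rate (0 means equal rates)."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates: dict[str, float]) -> float:
    """Lowest selection rate divided by the highest (1 means equal rates)."""
    return min(rates.values()) / max(rates.values())


# Toy outcomes from a hypothetical screening model: 10 applicants per group.
groups = ["A"] * 10 + ["B"] * 10
decisions = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0] + [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]

rates = selection_rates(groups, decisions)
gap = demographic_parity_difference(rates)
ratio = disparate_impact_ratio(rates)
print(rates)  # {'A': 0.6, 'B': 0.3}
print(f"parity gap = {gap:.2f}, impact ratio = {ratio:.2f}")

# Assumed threshold: the "four-fifths" rule of thumb.
if ratio < 0.8:
    print("Flag for expert review: selection rates differ substantially between groups")
```

A number like this does not prove a system is unfair; it tells the reviewers where to look more closely.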

MANAGE: This is the action part. It’s about actively dealing with the risks you’ve measured.

What to Do: Based on your risk assessments, you need to decide how to handle the biggest risks. This could mean tweaking the AI’s programming, improving its training data, adding more human oversight, or even deciding that a particular AI is just too risky to use. It also means having a plan for what to do if an AI system goes wrong, and how to safely shut it down when it’s no longer needed.

To make this even more practical, you can use the four "What to Do" steps above as a simple checklist to get started.

Some people think that having rules and governance just slows down innovation. But actually, the opposite is true. When there are no clear rules, teams can be hesitant to try new things because they’re scared of crossing an invisible line. Or, they might rush ahead and create risky "shadow IT" projects that nobody is overseeing. A good governance framework gets rid of that uncertainty. It creates clear, safe, and predictable pathways for innovation. It gives teams the confidence to experiment and build amazing things, because they know they’re working within safe boundaries. In this way, good governance is what truly unleashes innovation.

The AI Ethics Council: Your Company’s Conscience

At the very heart of a great AI governance programme is a dedicated team, which you might call an AI Ethics Council or a Responsible AI Committee. This council is the brain of the whole operation. It provides the vision, the oversight, and the accountability to turn good intentions into real-world actions. Its success doesn’t depend on its name, but on its clear purpose, its diverse team, and its real authority to make a difference.

The council needs a clear mission statement, or charter, that spells out what it’s there to do. This should include a few key jobs:

Giving Strategic Advice: The council should be a trusted advisor to the company’s top leaders and board of directors, helping them understand the ethical side of the company’s AI plans.

Creating and Upholding Policies: It’s the council’s job to write, approve, and make sure everyone follows the company’s AI ethics rules and principles, keeping them up-to-date as technology changes.

Reviewing High-Risk Projects: The council acts as a crucial checkpoint for any AI project that’s been identified as high-risk. It should have the power to review these projects, ask for changes, and even recommend that a project be stopped if it’s too risky.

Handling Problems: When a serious problem or ethical dilemma involving AI comes up, the council is the place it gets escalated to for a final decision.

Educating and Inspiring: The council should be a champion for AI ethics across the whole organisation, making sure everyone understands the role they play in using AI responsibly.

To do all this, the council can’t just be a small group of lawyers or tech experts. Its strength comes from having a mix of people with different skills and perspectives. A great council should have senior people from:

Top Leadership (A C-Suite Sponsor): Having a top executive on board, like a Chief AI Officer or Chief Technology Officer, gives the council real authority and ensures its advice is taken seriously.

Legal and Compliance: To provide expert knowledge on all the fast-changing AI laws and regulations.

Technology and Data Science: To give a deep, technical understanding of how AI models actually work.

Human Resources: To be the voice of the employees, advising on how AI will affect jobs and wellbeing.

Key Business Departments: To make sure the ethical rules are practical and make sense for how AI is actually being used day-to-day.

Ethics or Privacy Officer: A dedicated expert who can provide specialist guidance on ethical thinking and data protection.

Outside Experts (Optional but a great idea): Bringing in people from outside the company, like university professors or ethics experts, can offer fresh perspectives and stop the group from only thinking in one way.

How the council works is also vital. Its authority needs to be clearly defined: is it just an advisory group, or can it actually stop a project? A good model is one where the council’s recommendations must be followed unless the CEO formally overrules them, which makes ignoring its advice a deliberate, visible decision. The council also needs proper resources, like a budget and access to all the information it needs to do its job. Finally, it needs a clear decision-making process, with rules for how votes are taken and how every decision is recorded, so there’s a clear trail of how and why decisions were made.
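To make that decision process concrete, here is a minimal sketch of a council decision log. The quorum rule (at least half the council voting), the simple-majority rule, and the class and field names are all hypothetical assumptions. The one rule taken from the text is that a passed recommendation stays binding unless the CEO formally overrules it, which the sketch models by demanding a written justification for any override.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class CouncilDecision:
    """One entry in the council's decision log (fields are illustrative assumptions)."""
    project: str
    recommendation: str
    rationale: str
    council_size: int
    votes: dict[str, bool] = field(default_factory=dict)  # member -> in favour?
    decided_on: date = field(default_factory=date.today)
    ceo_override: Optional[str] = None  # written justification, if overruled

    def quorum_met(self) -> bool:
        # Assumed rule: at least half of the council must vote.
        return 2 * len(self.votes) >= self.council_size

    def passed(self) -> bool:
        # Assumed rule: simple majority of the votes cast, once quorum is met.
        in_favour = sum(self.votes.values())
        return self.quorum_met() and 2 * in_favour > len(self.votes)

    def is_binding(self) -> bool:
        # A passed recommendation must be followed unless the CEO formally overrules it.
        return self.passed() and self.ceo_override is None

    def overrule(self, justification: str) -> None:
        # Overriding is deliberately costly: it needs a written, recorded reason.
        if not justification.strip():
            raise ValueError("A CEO override must include a written justification")
        self.ceo_override = justification


decision_log: list[CouncilDecision] = []

# Invented example decision, for illustration only.
decision = CouncilDecision(
    project="CV screener",
    recommendation="Pause deployment until bias testing is complete",
    rationale="Selection rates differ sharply between applicant groups",
    council_size=7,
    votes={"Legal": True, "Data Science": True, "HR": True,
           "Business": False, "Ethics": True},
)
decision_log.append(decision)
print(decision.passed(), decision.is_binding())  # True True
```

Because each record keeps the vote, the rationale and any override together, the log itself becomes the trail of how and why each decision was made.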

Perhaps the most important, and often forgotten, job of this council is to be an organisational "translator." AI risk means different things to different people. A data scientist sees it as a technical problem, a lawyer sees it as a legal risk, and an HR leader sees it as an employee issue. The AI Ethics Council brings all these experts into one room to talk to each other. The lawyer has to explain data privacy in a way the data scientist can turn into a technical fix. The data scientist has to explain fairness in a way a business leader can understand as a market risk. This act of translation is essential. It turns big ideas into practical actions and brings everyone together to work on a single, shared strategy for managing AI risk. The council’s greatest achievement isn’t just the decisions it makes, but the shared understanding it creates across the entire organisation.

The AI Transparency Report: Building Trust by Being Open

In today’s world, where AI is everywhere but often feels like a mystery, being open and honest is no longer just a nice-to-have; it’s a must-do. One of the best ways for an organisation to show it’s serious about using AI responsibly is by publishing a regular AI Transparency Report. This is a public document that explains how the company is using AI. It’s a powerful way to build trust by moving from just saying the right things to showing you’re actually doing them.

A report like this is useful for so many people. For customers and the public, it helps to open up the AI "mystery box," building the confidence needed to use AI-powered products and services. For regulators, it’s a way of showing you’re making a real effort to follow the rules, which can lead to a better relationship with them. For investors, it shows that the company has mature and responsible leadership, which is increasingly important. And for employees, it backs up the company’s promises about its ethical culture, helping to calm any worries they might have.

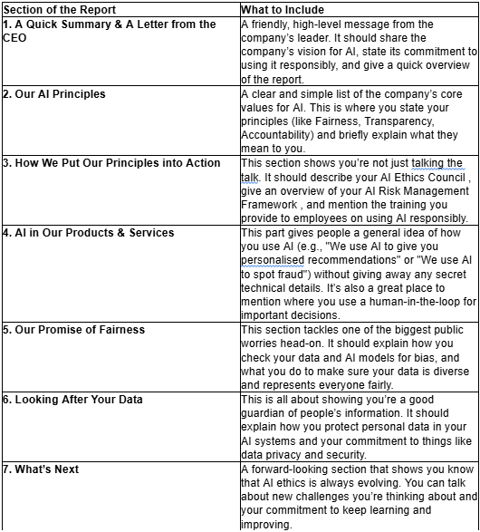

So, what makes a great AI Transparency Report?

To make the report really effective, use simple, accessible language and avoid technical jargon. Pictures and charts can also make it much easier to understand. The report should be honest, too, talking openly about challenges and even mistakes; this builds far more credibility than a report that reads like a polished marketing brochure. Finally, publishing the report regularly, at least once a year or even every six months, shows that this is an ongoing commitment.

In our fast-moving world, even a yearly report can feel a bit out of date. The most forward-thinking companies are now creating dynamic, digital "Transparency Hubs" on their websites. This turns the report from a static document into a living, breathing resource that’s always up-to-date. It allows people to see a high-level summary, but also click through to get more detail if they’re interested, like reading a specific policy or a case study. This is a fantastic way to show a deep and genuine commitment to being open and to position your organisation as a true leader in responsible AI.

