Attacks Against Generative AI Systems

Gen AI Attacks

ETHICS & GOVERNANCE

3/28/20252 min read

Attacks Against Generative AI Systems: What You Need to Know

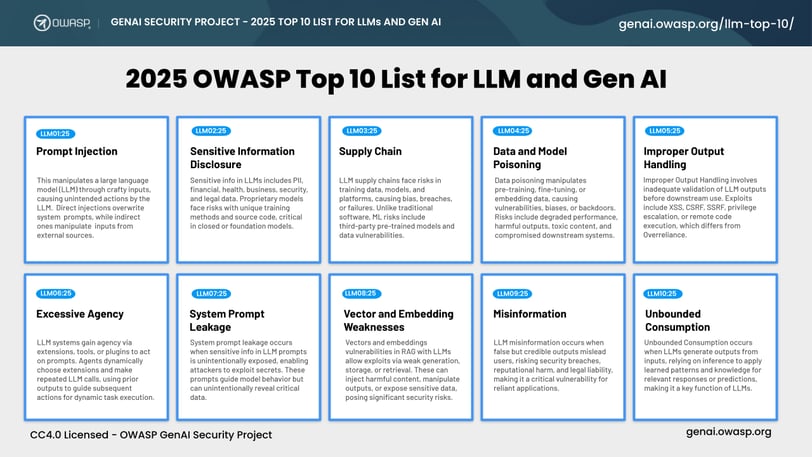

Generative AI systems are transforming industries, but with great potential comes significant risk. The Open Worldwide Application Security Project (OWASP) has identified several critical vulnerabilities that highlight the need for robust safeguards in Data and AI systems. Let’s break these down in a way that’s easy to understand and actionable.

1. Prompt Injections

Imagine someone sneaking malicious commands into an AI system. This is what prompt injection is all about. By feeding harmful inputs, attackers can manipulate the system into generating unauthorised or harmful responses. It’s like tricking a helpful assistant into doing something it shouldn’t.

2. Insecure Output Handling

AI systems generate a lot of data, but if this data isn’t properly sanitised or handled, it can lead to vulnerabilities like cross-site scripting or even leaking sensitive information. Think of it as leaving a door unlocked for hackers to walk right in.

3. Training Data Poisoning

AI systems learn from data, but what happens if that data is tampered with? Training data poisoning involves attackers corrupting the data used to train AI models, causing them to behave unpredictably or make harmful decisions.

4. Denial of Service (DoS)

AI systems can be overwhelmed by resource-intensive processes, making them unavailable to legitimate users. This is known as a denial-of-service attack, where attackers flood the system to bring it to a standstill.

5. Supply Chain Vulnerabilities

AI systems rely on a complex lifecycle of components and services. If any part of this supply chain is compromised, it can open the door to security attacks. It’s like a weak link in a chain that breaks under pressure.

6. Sensitive Data Leakage or Information Disclosure

AI systems often handle sensitive information, but flaws in data handling or privacy controls can lead to unintentional exposure. This is a major concern, especially when dealing with personal or confidential data.

7. Insecure Plugin Design

Plugins can extend the functionality of AI systems, but if they’re not designed securely, they can introduce vulnerabilities. Insecure inputs or insufficient access controls can make these plugins a target for attackers.

8. Excessive Agency

Sometimes, AI systems are given too much autonomy or functionality, which can lead to unintended consequences. This is known as excessive agency, where the system’s permissions or capabilities go beyond what’s necessary.

9. Overreliance on AI Systems

It’s easy to trust AI systems, but overreliance can be dangerous. Without proper oversight, these systems can disseminate inappropriate or harmful content, leading to serious consequences.

10. Model Theft

AI models are valuable intellectual property, but they’re not immune to theft. Model theft involves unauthorised access, copying, or exfiltration of proprietary AI models, which can undermine a company’s competitive edge.

Why This Matters

Generative AI systems are powerful tools, but they’re not invincible. By understanding these vulnerabilities, we can take proactive steps to safeguard AI systems and ensure they’re used responsibly.

At Wiser Tide, we believe in empowering you with the knowledge to navigate the evolving world of AI confidently. Whether you’re a business leader, developer, or simply curious about AI, staying informed is the first step to staying secure.

Connect

Empowering local businesses through tailored AI solutions.

Innovate

Transform

07543 809686

© 2025. All rights reserved.